
If you’re an SMB, you’ll agree that things get stretched quickly, whether managing internal ops, handling customer service, or keeping up with lead generation.

It’s no surprise you’re searching for ways to do more with less—less time, less overhead, and fewer hands on deck. Imagine having a group of AI agents, each trained for a specific job, working together behind the scenes.

They can research, summarize, calculate, and respond quickly, working like a well-coordinated team that never sleeps. Here’s the best part: this kind of multi-agent AI setup isn’t a futuristic concept; it’s one of the most talked-about AI trends of 2025.

According to Constant Contact, 91% of AI users say it has helped make their business more successful, and 60% reported time savings and greater efficiency. So which AI agent frameworks are best suited to this kind of work?

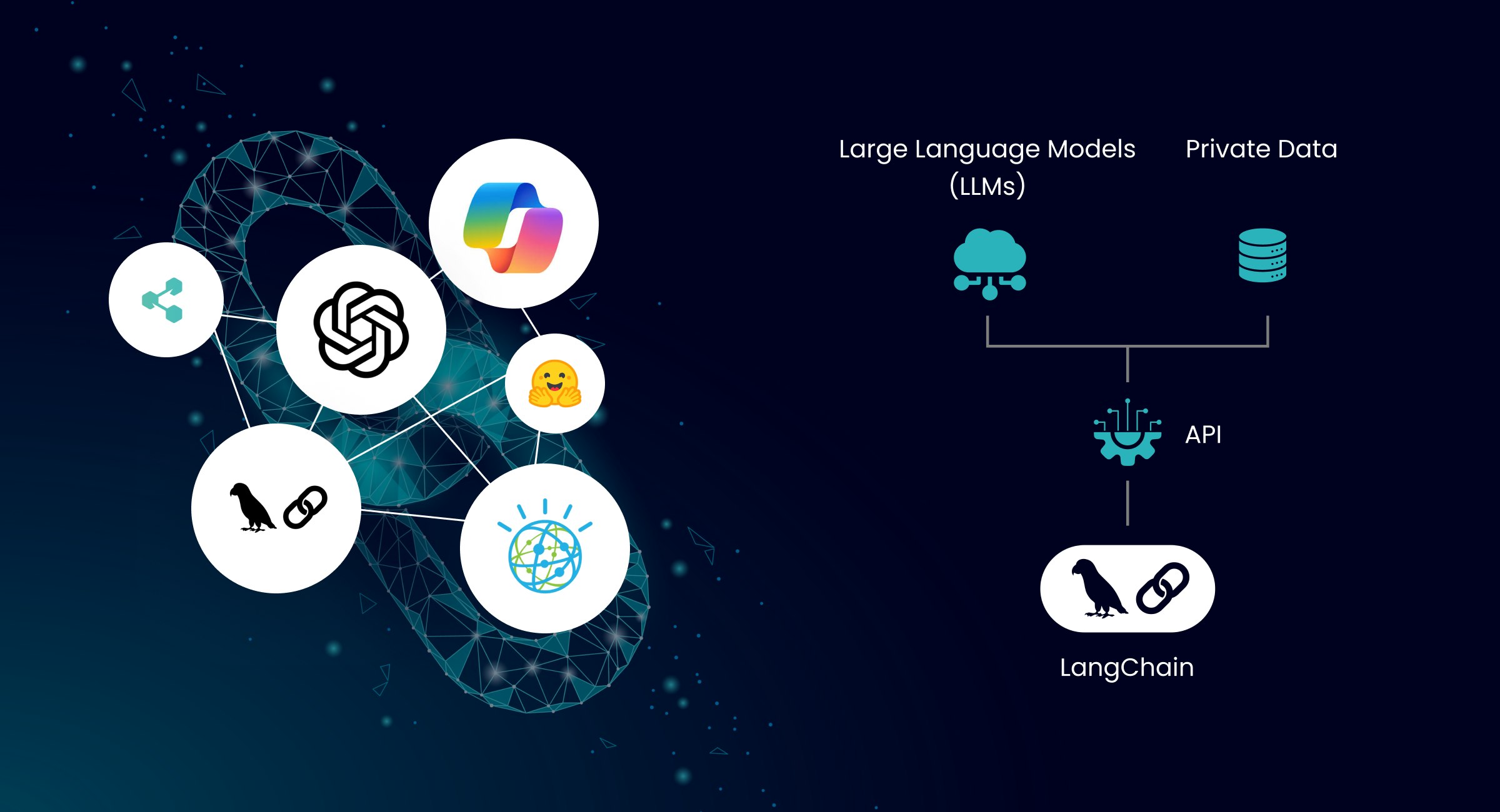

LangChain is one of them.

It’s an open-source framework that connects large language models (LLMs) to your business’s private data and APIs, so you can build context-aware apps that reason, such as AI agents and chatbots.

LangChain doesn’t lock you into a single black-box cognitive architecture, and it helps you move quickly from prototype to production with popular patterns like simple chains or retrieval-augmented generation (RAG). Of course, designing a system like this takes more than plugging in a few prompts.

That’s where Intuz can help. In this blog post, we’ll dissect the steps you need to take to build multi-AI agent workflows with LangChain. You’ll also find valuable examples and technologies you can apply to make a difference from day one.

7 Steps to Build a Multi-AI Agent Workflow Using LangChain

1. Define business use cases and agent roles

First things first: get clarity on where AI can help you. You don’t need to automate everything just yet. Start with the parts of your workflow that take up too much of your time, are repetitive, or tend to fall through the cracks, such as:

- Drafting content or summarizing long documents

- Extracting insights from feedback, surveys, or emails

- Drafting offer letters or rejection emails automatically

- Reconciling invoices and flagging anomalies in financial systems

- Providing instant answers to FAQs across chat, email, and social channels

Once you’ve picked the use case, break it down into smaller steps. Each step can become the role of one agent in your workflow. Let’s say you’re automating client onboarding:

- One agent gathers client info from forms or emails

- Another summarizes key details into a CRM-ready format

- A third drafts a welcome email personalized to the client’s industry

- A fourth checks for missing info and requests it if needed

You already know your processes. Give the right jobs to the right agents.
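To make the role breakdown concrete, here is a minimal, framework-agnostic Python sketch of the client-onboarding example. The `AgentRole` structure and the `missing_inputs` helper are hypothetical illustrations, not LangChain APIs; the point is that writing down what each role reads and produces lets you check, before building anything, that every handoff has an upstream producer.

```python
from dataclasses import dataclass, field


@dataclass
class AgentRole:
    """One narrowly scoped job in the workflow (hypothetical structure)."""
    name: str
    job: str
    needs: set[str] = field(default_factory=set)     # inputs this agent reads
    produces: set[str] = field(default_factory=set)  # outputs it hands off


# The client-onboarding example, expressed as four roles run in order.
ONBOARDING = [
    AgentRole("intake", "Gather client info from forms or emails",
              needs={"raw_submission"}, produces={"client_info"}),
    AgentRole("summarizer", "Summarize key details into a CRM-ready record",
              needs={"client_info"}, produces={"crm_record"}),
    AgentRole("welcome_writer", "Draft a welcome email for the client's industry",
              needs={"crm_record"}, produces={"welcome_draft"}),
    AgentRole("gap_checker", "Check for missing info and request it if needed",
              needs={"crm_record"}, produces={"follow_up_request"}),
]


def missing_inputs(roles, initial_inputs):
    """Return (role, input) pairs where no earlier role or initial input supplies the input."""
    available = set(initial_inputs)
    gaps = []
    for role in roles:
        for item in sorted(role.needs - available):
            gaps.append((role.name, item))
        available |= role.produces
    return gaps


print(missing_inputs(ONBOARDING, {"raw_submission"}))  # → []
```

An empty list means every agent receives what it needs; forgetting the raw form submission would surface as `("intake", "raw_submission")`.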

2. Select the AI model and LangChain components

Once you’ve mapped out your use case and defined the roles of your agents, it’s time to get down to brass tacks: choose the right language model. Depending on the task demands, your decision should factor in cost, speed, and capability.

Here’s how to think about it with examples:

- Cost: If your app involves frequent or high-volume usage, like a customer support chatbot, opt for a cost-efficient model, like GPT-3.5 Turbo or Cohere Command R

- Speed: If your app relies on real-time interactions, such as autocomplete in a coding assistant or a live tutoring tool, you’ll want a fast-response model, such as Claude Sonnet or Mistral Instruct (open-source)

- Capability: If your agents need to reason, summarize, or work with complex or lengthy documents, you’ll need a more advanced model, like Claude 3 Opus or GPT-4 Turbo

Now for the LangChain building blocks that matter most in multi-agent AI systems:

a. Agents

These are your core units. ReAct-style agents interleave step-by-step reasoning with tool calls, while “OpenAIFunctionsAgent” uses OpenAI’s function calling to invoke APIs and tools.

b. Tools

Agents can use tools like calculators, web searches, or custom connectors for external systems—think of them as utility belts.
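To show how an agent and its tools fit together, here is a minimal sketch of the ReAct loop (reason, act with a tool, observe the result, repeat) in plain Python. It is not LangChain’s agent implementation: `scripted_llm` is a hypothetical stand-in that returns canned responses instead of calling a model, and the `Action: tool[input]` text format is an assumption chosen for illustration.

```python
import re


def calculator(expression: str) -> str:
    """Toy arithmetic tool. Input is restricted to digits and operators;
    never eval() untrusted input in production."""
    if not re.fullmatch(r"[\d\s+\-*/().]+", expression):
        return "error: unsupported expression"
    return str(eval(expression))


TOOLS = {"calculator": calculator}
ACTION = re.compile(r"Action: (\w+)\[(.*)\]")


def scripted_llm(transcript: str) -> str:
    """Hypothetical stand-in for a model call that returns canned ReAct steps."""
    if "Observation:" not in transcript:
        return "Thought: I need the total count.\nAction: calculator[12 * 7]"
    return "Thought: The tool gave me the number.\nFinal Answer: 84 invoices"


def react_agent(question, llm, tools, max_steps=5):
    """Loop until the model emits a final answer or the step budget runs out."""
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        step = llm(transcript)
        transcript += "\n" + step
        if "Final Answer:" in step:
            return step.split("Final Answer:", 1)[1].strip(), transcript
        match = ACTION.search(step)
        if match is None:
            observation = "could not parse an action"
        else:
            name, arg = match.groups()
            tool = tools.get(name)
            observation = tool(arg) if tool else f"unknown tool {name}"
        # Feed the tool result back so the next reasoning step can use it.
        transcript += f"\nObservation: {observation}"
    return None, transcript  # gave up after max_steps


answer, log = react_agent("How many invoices are in 12 batches of 7?", scripted_llm, TOOLS)
print(answer)  # → 84 invoices
```

The `max_steps` cap matters in practice: a real model can keep calling tools without converging, so the loop needs an exit.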

c. Memory

You can give agents short-term memory (to keep track of a conversation) or long-term memory (to recall past context across sessions).

d. Chains

These structure workflows by connecting agents in a sequence or with branching logic: a chain decides what happens next based on what just happened.
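The idea of a chain can be sketched without any framework: each step takes a state dict and returns an updated one, and a branch picks the next step based on the previous output. In LangChain you would typically compose runnables with the `|` operator (which builds a `RunnableSequence`) and use `RunnableBranch` for conditions; the `chain` and `branch` helpers and the triage agents below are hypothetical stand-ins for that idea.

```python
from typing import Callable

Step = Callable[[dict], dict]


def chain(*steps: Step) -> Step:
    """Run steps in order, feeding each one's output into the next."""
    def run(state: dict) -> dict:
        for step in steps:
            state = step(state)
        return state
    return run


def branch(condition: Callable[[dict], bool], if_true: Step, if_false: Step) -> Step:
    """Choose the next step based on what the previous step produced."""
    def run(state: dict) -> dict:
        return if_true(state) if condition(state) else if_false(state)
    return run


# Hypothetical agents, stubbed as pure functions.
def classify(state: dict) -> dict:
    return {**state, "urgent": "refund" in state["email"].lower()}


def escalate(state: dict) -> dict:
    return {**state, "route": "human_support"}


def auto_reply(state: dict) -> dict:
    return {**state, "route": "faq_agent"}


triage = chain(classify, branch(lambda s: s["urgent"], escalate, auto_reply))
print(triage({"email": "I want a REFUND now"})["route"])  # → human_support
```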

Remember: Every project looks a little different. But for SMBs, our AI agent development company has found a few combinations that balance performance and simplicity well.

3. Design an agent communication architecture

This part shapes the entire flow of your system. If you keep it clean and straightforward, everything runs more smoothly. There are a few ways to structure agent communication:

- Parallel: Multiple agents work at the same time on different parts of a task, then combine their outputs

- Sequential: One agent finishes, then hands off to the next

- Hierarchical: A manager agent delegates tasks to specialized agents, checks results, and decides what to do next
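The three structures above can be sketched in a few lines of plain Python. The agents here are hypothetical stubs (real ones would call an LLM), and a thread pool stands in for whatever concurrency your runtime provides; treat this as an illustration of the control flow, not a LangChain implementation.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical specialist agents, stubbed as text-to-text functions.
def researcher(task: str) -> str:
    return f"facts about {task}"


def writer(task: str) -> str:
    return f"draft on {task}"


def reviewer(text: str) -> str:
    return text + " (reviewed)"


def sequential(task, agents):
    """Each agent finishes, then hands its output to the next."""
    result = task
    for agent in agents:
        result = agent(result)
    return result


def parallel(task, agents):
    """Agents work on the same task at once; outputs come back in agent order."""
    with ThreadPoolExecutor() as pool:
        return list(pool.map(lambda agent: agent(task), agents))


def hierarchical(task, specialists, check):
    """A manager delegates to each specialist, checks results, and keeps what passes."""
    accepted = {}
    for name, agent in specialists.items():
        output = agent(task)
        if check(output):
            accepted[name] = output
    return accepted


print(sequential("pricing", [writer, reviewer]))  # → draft on pricing (reviewed)
```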

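To manage these flows, LangChain offers tools like RouterChain and MultiPromptChain, which route a conversation or task to the right agent based on its context. Note that recent LangChain releases mark these legacy chains as deprecated and point to LangChain Expression Language (LCEL) runnables or LangGraph for new routing logic.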
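Routing boils down to scoring each destination against the incoming request and picking the best match, with a fallback when nothing fits. LangChain’s router chains make that choice with an LLM or embeddings; the sketch below swaps in simple keyword overlap so it runs without a model. The destination names and keyword sets are hypothetical.

```python
import re

# Hypothetical destination agents and the keywords that suggest each one.
DESTINATIONS = {
    "billing": {"invoice", "refund", "payment", "charge"},
    "onboarding": {"signup", "account", "setup", "welcome"},
    "support": {"error", "bug", "crash", "login"},
}


def route(message: str, destinations=DESTINATIONS, default="general") -> str:
    """Send the message to the destination whose keywords overlap it most."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    scores = {name: len(words & keywords) for name, keywords in destinations.items()}
    best = max(scores, key=scores.get)
    # Fall back to a general-purpose agent when no destination matches at all.
    return best if scores[best] > 0 else default


print(route("The app shows an error at login"))  # → support
```

The explicit `default` route is worth keeping even with an LLM-based router, so unexpected requests land somewhere sensible instead of failing.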

A good practice here is to design for lightweight communication. Let agents share only what’s needed to keep things moving. This keeps prompts short and avoids bottlenecks, which improves performance, particularly when running multiple agents together.

4. Implement workflow orchestration

This is the step where everything comes to life, ensuring the right agent gets the right task at the right time. Three LangChain components do most of the work here:

- AgentExecutor: Runs agents with the tools and logic they need

- RouterChain: Directs tasks to different agents based on context

- MultiPromptChain: Lets you switch between prompts or agent behaviors dynamically

For example, if you’re a small marketing agency and you want to automate content ideation for clients, you’d typically create a workflow like this:

- An agent gathers brand details from a database

- Another agent pulls recent industry trends via a web search tool

- A third agent generates three campaign ideas tailored to the client

- One more agent formats the ideas into a short slide deck or email draft

This whole workflow could run in the background after a client call, so your team starts the next day with up-to-date information.
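The content-ideation workflow can be sketched end to end with stub agents. Everything here is hypothetical: `BRAND_DB` stands in for your client database, `trends_agent` returns canned trends where a real agent would call a web search tool, and in LangChain each function would be an agent or runnable rather than a plain Python function.

```python
# Hypothetical stand-in for a client database lookup.
BRAND_DB = {"acme": {"industry": "fitness", "tone": "upbeat"}}


def brand_agent(client: str) -> dict:
    """Gather brand details, with a neutral fallback for unknown clients."""
    return BRAND_DB.get(client, {"industry": "unknown", "tone": "neutral"})


def trends_agent(industry: str) -> list[str]:
    # A real agent would call a web search tool; this returns canned trends.
    return [f"{industry} trend {i}" for i in (1, 2, 3)]


def ideas_agent(brand: dict, trends: list[str]) -> list[str]:
    """Generate three campaign ideas that match the brand's tone."""
    return [f"{brand['tone'].title()} campaign riding '{t}'" for t in trends[:3]]


def formatter_agent(client: str, ideas: list[str]) -> str:
    """Format the ideas as a short email draft."""
    lines = "\n".join(f"- {idea}" for idea in ideas)
    return f"Subject: Campaign ideas for {client.title()}\n\n{lines}"


def ideation_workflow(client: str) -> str:
    brand = brand_agent(client)
    trends = trends_agent(brand["industry"])
    ideas = ideas_agent(brand, trends)
    return formatter_agent(client, ideas)


print(ideation_workflow("acme"))
```

Each agent takes only the fields it needs (the trends agent sees the industry, not the whole brand record), which follows the lightweight-communication advice from step 3.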

5. Deploy memory and long-term context

Remember how ChatGPT remembers your conversation with it? That’s memory helping it hold on to past context, remember details across steps, and create more natural interactions. You can do the same with your AI agents.

LangChain gives you several memory types to choose from when building AI agents for business automation:

- ConversationBufferMemory: Stores recent conversation history so that agents can stay consistent during a task or session

- ConversationSummaryMemory: Keeps a running summary of key points, so long sessions still fit within the model’s context window

- Vector store-backed memory (VectorStoreRetrieverMemory): Lets agents search through larger volumes of past data, like previous chats, documents, or customer records, to personalize conversations

With memory in place, your agents can recall what was already said, avoid repeating questions, and build on previous insights. That’s ideal for customer-facing roles, ongoing analysis, or any task that involves multiple steps or sessions.
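Two of these memory styles can be sketched in plain Python: a windowed buffer that keeps the last few turns (the idea behind LangChain’s `ConversationBufferWindowMemory`) and a similarity search over stored records (the idea behind vector store-backed memory). The bag-of-words `embed` function is a hypothetical stand-in for a real embedding model and vector database, used here only so the example runs on its own.

```python
import math
import re
from collections import Counter, deque


class BufferMemory:
    """Keeps only the last k conversation turns."""

    def __init__(self, k: int = 4):
        self.turns = deque(maxlen=k)  # older turns drop off automatically

    def save(self, user: str, agent: str) -> None:
        self.turns.append((user, agent))

    def context(self) -> str:
        return "\n".join(f"User: {u}\nAgent: {a}" for u, a in self.turns)


def embed(text: str) -> Counter:
    # Toy bag-of-words "embedding"; a real system would call an embedding model.
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class VectorMemory:
    """Stores past records and recalls the ones most similar to a query."""

    def __init__(self):
        self.records = []

    def add(self, text: str) -> None:
        self.records.append((text, embed(text)))

    def recall(self, query: str, top_k: int = 1) -> list[str]:
        q = embed(query)
        ranked = sorted(self.records, key=lambda r: cosine(q, r[1]), reverse=True)
        return [text for text, _ in ranked[:top_k]]


memory = VectorMemory()
memory.add("Client Acme prefers email invoices")
memory.add("Client Beta asked about API limits")
print(memory.recall("How does Acme want invoices?"))  # → ['Client Acme prefers email invoices']
```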

6. Develop, test, and debug iteratively

Once your LangChain-based multi-agent system is mapped out, it’s time to test it. Start with a small slice of the workflow: just two or three agents solving a specific task. This lets you test fundamental interactions early, without getting lost in complexity.

LangChain gives you a few tools to help with multi-agent AI development and debugging:

- Verbose mode: See exactly what’s happening inside the agent as it runs

- Callbacks: Track each step in the workflow and understand how agents are using tools

- LangSmith (if you use it): LangChain’s platform for tracing, testing, and evaluating your workflows, with more control and deeper insights
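Callbacks work by letting the runtime notify a handler at fixed points, such as when a tool starts and finishes. LangChain’s callback handlers expose hooks like `on_tool_start` and `on_tool_end`; the handler and `run_tool` wrapper below are a simplified, hypothetical version with their own signatures (not LangChain’s exact ones) to show what a per-step trace can capture: which agent called which tool, with what input, and how long it took.

```python
import time


class TraceHandler:
    """Collects one record per tool call (simplified, hypothetical hook signatures)."""

    def __init__(self):
        self.events = []
        self._start = None

    def on_tool_start(self, agent: str, tool: str, tool_input: str) -> None:
        self._start = time.perf_counter()
        self.events.append({"agent": agent, "tool": tool, "input": tool_input})

    def on_tool_end(self, output) -> None:
        self.events[-1]["output"] = output
        self.events[-1]["seconds"] = time.perf_counter() - self._start


def run_tool(agent, tool_name, tool, tool_input, callbacks=()):
    """Call a tool and notify every registered handler before and after."""
    for cb in callbacks:
        cb.on_tool_start(agent, tool_name, tool_input)
    output = tool(tool_input)
    for cb in callbacks:
        cb.on_tool_end(output)
    return output


tracer = TraceHandler()
run_tool("researcher", "word_count", lambda s: len(s.split()), "three word input", [tracer])
print(tracer.events[0]["output"])  # → 3
```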

When it comes to testing, a layered approach works best:

- Unit testing: Make sure each tool or custom function behaves correctly

- Integration testing: Run workflows end-to-end to check whether agents hand off information properly

- User testing: Find out how your team or customers experience the system
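The first two layers can be sketched with Python’s built-in `unittest`. The invoice tool and the two agents are hypothetical examples in the spirit of the invoice-reconciliation use case from step 1: the unit tests exercise one tool in isolation, and the integration test checks that one agent’s output is a valid input for the next.

```python
import unittest


def parse_invoice_total(text: str) -> float:
    """Hypothetical custom tool: extract the amount from a 'Total: $X' line."""
    for line in text.splitlines():
        if line.lower().startswith("total:"):
            return float(line.split("$", 1)[1].replace(",", ""))
    raise ValueError("no total found")


def extractor_agent(text: str) -> dict:
    return {"total": parse_invoice_total(text)}


def flagging_agent(record: dict, limit: float = 10_000.0) -> dict:
    return {**record, "flagged": record["total"] > limit}


class ToolTests(unittest.TestCase):
    """Unit level: one tool, no other agents involved."""

    def test_parses_total(self):
        self.assertEqual(parse_invoice_total("Item A\nTotal: $1,250.50"), 1250.5)

    def test_missing_total_raises(self):
        with self.assertRaises(ValueError):
            parse_invoice_total("no amounts here")


class HandoffTests(unittest.TestCase):
    """Integration level: the extractor's output feeds the flagging agent."""

    def test_large_invoice_is_flagged(self):
        self.assertTrue(flagging_agent(extractor_agent("Total: $12,000"))["flagged"])
```

Saved to a file, these run with `python -m unittest <file>`; user testing, the third layer, still needs real people.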

This is where having the right AI development company makes a big difference. With Intuz, you get support not just during build-out, but also through testing and iteration. You move faster, avoid common traps, and get a system that fits your business.

7. Optimize and scale the system

Once your workflow is running and delivering results, make it faster, more efficient, and ready to grow with your business.

A few key metrics to watch:

- Latency: How long each agent takes to respond

- Token usage: Which parts of the workflow consume the most tokens

- Cost: Total spend per run if you’re using paid APIs or advanced LLMs

Even minor tweaks here, like trimming prompts or moving a simple step to a cheaper model, can add up to significant gains.
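Tracking these metrics mostly comes down to recording tokens and latency per step and multiplying tokens by your provider’s prices. The model names and per-1,000-token prices below are hypothetical placeholders; substitute your provider’s current pricing.

```python
from dataclasses import dataclass

# Hypothetical per-1,000-token prices in USD; check your provider's current rates.
PRICE_PER_1K = {
    "small-model": {"input": 0.0005, "output": 0.0015},
    "large-model": {"input": 0.01, "output": 0.03},
}


@dataclass
class StepMetrics:
    agent: str
    model: str
    input_tokens: int
    output_tokens: int
    seconds: float

    @property
    def cost(self) -> float:
        p = PRICE_PER_1K[self.model]
        return (self.input_tokens * p["input"] + self.output_tokens * p["output"]) / 1000


def summarize_run(steps):
    """Summed latency, total tokens, total cost, and the most expensive agent for one run."""
    return {
        "seconds": sum(s.seconds for s in steps),  # wall time if steps ran sequentially
        "tokens": sum(s.input_tokens + s.output_tokens for s in steps),
        "cost": round(sum(s.cost for s in steps), 6),
        "priciest_agent": max(steps, key=lambda s: s.cost).agent,
    }


run = [
    StepMetrics("researcher", "small-model", 2000, 500, 1.2),
    StepMetrics("writer", "large-model", 1500, 800, 3.4),
]
print(summarize_run(run))
```

With these placeholder prices, the writer step costs (1,500 × 0.01 + 800 × 0.03) / 1,000 = $0.039 of the run’s $0.04075 total, which points to the writer as the first place to optimize.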

There are also several ways to scale without losing efficiency:

- Horizontal scaling: Run multiple workflows at once to handle higher volumes

- Caching: Store results of common queries or repeated tasks, so agents don’t have to recalculate every time

- Async execution: Use LangChain’s “RunnableParallel” to run independent agents concurrently and “RunnableSequence” to streamline handoffs; runnables also offer async methods such as ainvoke and abatch
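Caching and async execution combine naturally: independent agents run concurrently, and repeated queries are answered from the cache without another model call. This sketch uses plain `asyncio` and a dict instead of LangChain’s own caching and async runnables; `call_agent` is hypothetical, and its `asyncio.sleep` stands in for model or network latency.

```python
import asyncio

CACHE: dict[tuple[str, str], str] = {}
CALLS = {"count": 0}  # counts uncached agent calls, for illustration


async def call_agent(agent: str, query: str) -> str:
    """Hypothetical agent call with a simple in-memory cache."""
    key = (agent, query)
    if key in CACHE:
        return CACHE[key]  # cache hit: no model call needed
    CALLS["count"] += 1
    await asyncio.sleep(0.1)  # simulated model/network latency
    result = f"{agent} answer to {query!r}"
    CACHE[key] = result
    return result


async def run_parallel(query: str) -> list[str]:
    # Independent agents run concurrently, so wall time is close to the
    # slowest single call rather than the sum of all three.
    agents = ("researcher", "analyst", "writer")
    return await asyncio.gather(*(call_agent(a, query) for a in agents))


first = asyncio.run(run_parallel("Q3 churn"))
second = asyncio.run(run_parallel("Q3 churn"))  # served entirely from the cache
print(CALLS["count"])  # → 3
```

One caveat with this simple design: two concurrent requests for the same key can both miss the cache, so production systems often add a lock or an in-flight request map.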

When it’s time to take your system live, you have options. You can deploy it through serverless APIs for flexibility, or use containerized microservices for more control and performance.

This is where post-deployment support matters. Once your agents run in the real world, you’ll notice patterns. Our AI agent development company can help you track and respond to those changes over time with performance tuning, model updates, and new features.

Take an AI-First Approach Grounded in Real Business Outcomes

LangChain is a flexible, scalable foundation, but expert implementation is key. That’s where Intuz steps in. Using this particular framework, we can provide end-to-end custom AI solutions tailored to specific business use cases.

Our AI agent development services can also address complex integration challenges, ensuring seamless connectivity between AI agents, data sources, and existing systems for optimal performance.

Our team works in short, focused cycles, so you see value early without locking into a rigid plan or facing surprise overheads.

Book a 15-minute discovery call (yes, only a 15-minute call) with us to find out more.