
Imagine a potential customer landing on your travel platform or app and typing something simple like: “I want a beach holiday in June, somewhere quiet, not too expensive, with good food and a direct flight from Chicago.”

On most platforms, the customer then has to choose a destination, apply filters, open several tabs, compare prices, and repeat the process until they stumble onto something that feels right. But here’s the thing:

Static search, generic recommendations, and endless browsing work against the experience customers actually want.

And since AI-powered travel software accounted for 69% of the market’s revenue share in 2024, it’s fair to say AI can raise the bar considerably.

In this blog, we uncover ways to integrate an AI-powered trip search and recommendation engine into your existing travel platform. But first, let’s get something straight.

AI Solutions Intuz Delivers for Travel Businesses

1. Natural language queries

Your customers type or speak in plain language. The model interprets these prompts using a combination of:

- Intent detection (trip type, purpose, preferences)

- Entity extraction (dates, destinations, budget, duration, group size)

- Constraint parsing (flight availability, max travel time, mobility needs)

This can be done using LLMs for open-ended prompts or dedicated NLU pipelines (spaCy, Transformers, or custom classifiers).
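To make intent detection, entity extraction, and constraint parsing concrete, here is a minimal rule-based sketch that turns the example query from the introduction into a structured object. It is only a stand-in for an LLM or NLU model. The keyword tables, the `ParsedQuery` fields, and the `parse_query` function are all hypothetical, and a production system would learn these mappings instead of hard-coding them.

```python
import re
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical keyword tables; an LLM or trained NLU model would replace these
TRIP_TYPES = {"beach": "beach", "ski": "ski", "city break": "city", "safari": "safari"}
MONTHS = {m: i for i, m in enumerate(
    ["january", "february", "march", "april", "may", "june", "july",
     "august", "september", "october", "november", "december"], start=1)}
PREFERENCES = ("quiet", "good food", "family", "nightlife")


@dataclass
class ParsedQuery:
    trip_type: Optional[str] = None          # intent
    travel_month: Optional[int] = None       # entity: travel date
    origin_city: Optional[str] = None        # entity: origin
    budget_tier: Optional[str] = None        # entity: budget
    preferences: list = field(default_factory=list)
    constraints: dict = field(default_factory=dict)


def parse_query(text: str) -> ParsedQuery:
    lowered = text.lower()
    parsed = ParsedQuery()

    # Intent detection: the first matching trip-type keyword wins
    parsed.trip_type = next((t for k, t in TRIP_TYPES.items() if k in lowered), None)

    # Entity extraction: month name (naive: "may" can also be a verb) and "from <City>"
    parsed.travel_month = next(
        (n for m, n in MONTHS.items() if re.search(rf"\b{m}\b", lowered)), None)
    origin = re.search(r"\bfrom ([A-Z][a-z]+(?: [A-Z][a-z]+)*)", text)
    parsed.origin_city = origin.group(1) if origin else None
    if any(p in lowered for p in ("not too expensive", "cheap", "budget")):
        parsed.budget_tier = "low_to_mid"
    parsed.preferences = [p for p in PREFERENCES if p in lowered]

    # Constraint parsing: hard requirements handed to the inventory lookup
    if "direct flight" in lowered:
        parsed.constraints["max_stops"] = 0
    return parsed


if __name__ == "__main__":
    query = ("I want a beach holiday in June, somewhere quiet, not too expensive, "
             "with good food and a direct flight from Chicago.")
    print(parse_query(query))
```

An LLM-based version would typically return the same structure (for example, as JSON matching `ParsedQuery`), so downstream components do not need to know which approach produced it.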

2. AI travel recommendation engine

Personalization is driven by user embeddings and behavioural signals, such as browsing and search patterns, engagement depth, and historical bookings. Then, recommendations are generated via:

- Re-ranking models

- Content-based filtering

- Collaborative filtering
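The sketch below combines content-based and collaborative signals. It assumes sparse tag-weight profiles for users and items, plus a list of past bookings per user. All function names and the blend weight `alpha` are illustrative. The "re-ranking" step here is only a weighted blend; a production system would usually replace it with a trained re-ranking model.

```python
import math
from collections import Counter
from itertools import combinations


def cosine(a: dict, b: dict) -> float:
    """Cosine similarity between two sparse tag-weight vectors."""
    dot = sum(w * b.get(tag, 0.0) for tag, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def content_scores(user_profile: dict, item_features: dict) -> dict:
    """Content-based filtering: match the user's preferences to item attributes."""
    return {item: cosine(user_profile, feats) for item, feats in item_features.items()}


def collaborative_scores(user_history: list, all_histories: list) -> dict:
    """Item-item collaborative filtering from booking co-occurrence, scaled to 0..1."""
    co_counts = Counter()
    for history in all_histories:
        for a, b in combinations(sorted(set(history)), 2):
            co_counts[(a, b)] += 1
            co_counts[(b, a)] += 1
    scores = Counter()
    for (a, b), count in co_counts.items():
        # Score items often booked alongside the user's past bookings
        if a in user_history and b not in user_history:
            scores[b] += count
    top = max(scores.values(), default=0)
    return {item: s / top for item, s in scores.items()} if top else {}


def rerank(content: dict, collab: dict, alpha: float = 0.6) -> list:
    """Blend both signals; alpha is a hypothetical weight you would tune offline."""
    items = set(content) | set(collab)
    blended = {i: alpha * content.get(i, 0.0) + (1 - alpha) * collab.get(i, 0.0) for i in items}
    return sorted(blended, key=blended.get, reverse=True)
```

Content-based scores work from day one. The collaborative term only contributes once other travelers' bookings overlap with this user's, which is why platforms tend to add it later.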

3. Multi-agent orchestration layer

Complex queries often require multiple AI components running in parallel. A lightweight orchestrator coordinates tasks handled by:

- NLP/intent agent

- Inventory lookup agent

- Pricing evaluation agent

- Itinerary assembly agent

- Destination scoring agent

Each module returns structured outputs, which the orchestrator aggregates before sending results back to the user.
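A lightweight orchestrator can be sketched with Python's `asyncio`. The intent agent runs first because everything else depends on its output. The independent agents then run concurrently via `asyncio.gather`, and the orchestrator merges their structured outputs. The agent functions and their return values are hypothetical placeholders for real model or supplier calls.

```python
import asyncio


# Hypothetical agents; each would call a model or supplier API in production
async def intent_agent(query):
    await asyncio.sleep(0)  # placeholder for an LLM/NLU call
    return {"trip_type": "beach", "origin": "Chicago", "month": 6}

async def inventory_agent(intent):
    await asyncio.sleep(0)
    return {"candidates": ["tulum", "cancun", "punta_cana"]}

async def pricing_agent(intent):
    await asyncio.sleep(0)
    return {"prices": {"tulum": 1450, "cancun": 1200, "punta_cana": 1600}}

async def scoring_agent(intent):
    await asyncio.sleep(0)
    return {"scores": {"tulum": 0.92, "cancun": 0.71, "punta_cana": 0.80}}

async def itinerary_agent(destination, intent):
    await asyncio.sleep(0)
    return {"destination": destination, "days": 7}


async def orchestrate(query, top_k=2):
    # Step 1: intent must be known before the other agents can run
    intent = await intent_agent(query)
    # Step 2: independent agents run in parallel
    inventory, pricing, scoring = await asyncio.gather(
        inventory_agent(intent), pricing_agent(intent), scoring_agent(intent))
    ranked = sorted(inventory["candidates"],
                    key=lambda d: scoring["scores"].get(d, 0.0), reverse=True)
    # Step 3: assemble itineraries only for the top results
    itineraries = await asyncio.gather(*(itinerary_agent(d, intent) for d in ranked[:top_k]))
    # Aggregate the structured outputs into one response
    return [{"destination": it["destination"],
             "price": pricing["prices"][it["destination"]],
             "score": scoring["scores"][it["destination"]],
             "days": it["days"]} for it in itineraries]


if __name__ == "__main__":
    print(asyncio.run(orchestrate("beach holiday in June from Chicago")))
```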

4. Context-aware pricing engine

Pricing intelligence combines business rules with Machine Learning (ML)-based scoring. The final results are sorted by value fit (how well each option’s price matches its relevance to the customer), not just by price, so the customer sees the most relevant options first.
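Here is one possible way to express a value-fit score. It assumes each offer already carries an ML relevance score and a margin figure. Business rules remove offers that are unavailable or below a margin floor. The remaining offers are scored as relevance multiplied by a price-fit penalty for exceeding the customer's budget. The formula, the `Offer` fields, and the 5% margin floor are illustrative assumptions, not a standard.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Offer:
    offer_id: str
    price: float        # total trip price in the customer's currency
    relevance: float    # assumed output of an ML scoring model, in [0, 1]
    margin_pct: float   # platform margin on this offer, e.g. 0.12 for 12%
    available: bool


def value_fit(offer: Offer, budget: float, min_margin: float = 0.05) -> Optional[float]:
    # Business rules: hard exclusions before any scoring
    if not offer.available or offer.margin_pct < min_margin:
        return None
    # Price fit: 1.0 within budget, then falls linearly to 0 at 50% over budget
    overrun = max(0.0, offer.price / budget - 1.0)
    price_fit = max(0.0, 1.0 - 2.0 * overrun)
    return offer.relevance * price_fit


def rank_by_value_fit(offers: list, budget: float) -> list:
    scored = [(value_fit(o, budget), o) for o in offers]
    kept = [(s, o) for s, o in scored if s is not None]
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return [o.offer_id for _, o in kept]
```

With a $1,500 budget, a highly relevant $1,400 offer outranks a cheaper but less relevant $800 one. That is the practical difference between sorting by value fit and sorting by price.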

How to Integrate an AI-Powered Recommendation Engine into Your Travel Platform

1. Define recommendation goals and gather data

Start by being clear about what you want the recommendation engine to optimize.

Do you want to:

- Improve how relevant the recommendations are?

- Reduce time from search to booking?

- Increase average order value?

- Increase booking conversion?

Based on that, identify the data types required to support them. For example:

- User data for relevance and personalization

- Interaction data for learning the actual user intent over time

- Content data for matching destinations/hotels to preferences

- Transaction data for pricing and availability-aware recommendations

Don’t forget to map each goal to clear KPIs and define how they’ll be measured. Without measurement rules, you won’t know whether the model is actually improving booking performance or simply producing “better-looking” suggestions.

Here’s a quick breakdown you can use:

| Goal | KPIs to track |
|---|---|
| Improve relevance of recommendations | CTR on recommended items |
| Reduce time from search to booking | Average search → booking duration |
| Increase booking conversion | Search → booking conversion rate |
| Increase average order value | Average booking value |
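The KPIs in the table can be computed from a basic event log. This sketch assumes a hypothetical schema:

- Every event has a `session_id`, a `type` (`search`, `rec_impression`, `rec_click`, or `booking`), and an ISO-8601 timestamp.
- Bookings also carry a `value`.

Adapt the event names to whatever your analytics stack actually emits.

```python
from datetime import datetime
from statistics import mean


def compute_kpis(events: list) -> dict:
    """Compute the four KPIs from a flat list of tracked events."""
    impressions = sum(1 for e in events if e["type"] == "rec_impression")
    clicks = sum(1 for e in events if e["type"] == "rec_click")
    searches, bookings = {}, {}
    for e in events:
        ts = datetime.fromisoformat(e["ts"])
        if e["type"] == "search":
            # The first search in a session marks the start of the funnel
            searches.setdefault(e["session_id"], ts)
        elif e["type"] == "booking":
            bookings[e["session_id"]] = (ts, e["value"])  # last booking per session
    converted = [s for s in searches if s in bookings]
    minutes = [(bookings[s][0] - searches[s]).total_seconds() / 60 for s in converted]
    return {
        "rec_ctr": clicks / impressions if impressions else 0.0,
        "search_to_booking_rate": len(converted) / len(searches) if searches else 0.0,
        "avg_search_to_booking_min": mean(minutes) if minutes else None,
        "avg_booking_value": mean(v for _, v in bookings.values()) if bookings else None,
    }
```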

Intuz Recommends

With your goal(s) and datasets locked in, standardize all formats, such as currencies, date formats, airline codes, property attributes, and hotel tags, so they follow a uniform structure. Remove incomplete records, conflicting attributes, and duplicate entries so the model learns from reliable information.
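A minimal cleaning pass for hotel offers might look like the following. These choices are assumptions made for illustration:

- the fixed `FX_TO_USD` rates
- the accepted date formats
- the required fields
- the deduplication key (`hotel_id` plus check-in date)

In practice, exchange rates come from a live rates source, and the right dedup key depends on your supplier data.

```python
from datetime import datetime

# Hypothetical fixed FX rates; a real pipeline would pull these from a rates service
FX_TO_USD = {"USD": 1.0, "EUR": 1.08, "GBP": 1.27}
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%m-%d-%Y")
REQUIRED = ("hotel_id", "price", "currency", "checkin")


def parse_date(value: str):
    """Normalize any accepted date format to ISO-8601, or None if unparseable."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except ValueError:
            continue
    return None


def clean_records(raw_records: list) -> list:
    cleaned, seen = [], set()
    for rec in raw_records:
        # Drop incomplete records
        if any(rec.get(k) in (None, "") for k in REQUIRED):
            continue
        currency = rec["currency"].strip().upper()
        checkin = parse_date(rec["checkin"])
        if currency not in FX_TO_USD or checkin is None:
            continue  # unknown currency or unparseable date
        row = {
            "hotel_id": str(rec["hotel_id"]).strip(),
            "price_usd": round(float(rec["price"]) * FX_TO_USD[currency], 2),
            "checkin": checkin,
            "tags": sorted({t.strip().lower() for t in rec.get("tags", [])}),
        }
        key = (row["hotel_id"], row["checkin"])
        if key in seen:
            continue  # duplicate entry
        seen.add(key)
        cleaned.append(row)
    return cleaned
```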

2. Select AI tools and technologies

This step determines how easily your engine will scale as your inventory, destinations, and user base grow. The table gives you a clear view of what works for a travel platform depending on your constraints.

| Platform | Best For | Pros | Cons | Cost |
|---|---|---|---|---|
| OpenAI / LLM APIs | Natural-language queries, intent detection, query rewriting | High accuracy, very fast to implement, ideal for complex prompts | API dependency, limited control over model behaviour | Usage-based (scales with traffic) |
| Hugging Face Transformers | Custom NLU models, embeddings, offline inference | Full control, tunable, large model library | Requires ML engineering + infra | Infra + compute costs |
| TensorFlow / PyTorch | Building custom ranking, scoring, hybrid recommenders | Fine-grained control, production-ready frameworks | Steeper learning curve, full responsibility for training & inference | Infra + engineering costs |
| Google Vertex AI | End-to-end ML pipelines, automated training / retraining | Managed MLOps, scalable, reduces ops overhead | Ties you into Google ecosystem | Platform + compute |
| Elasticsearch / OpenSearch | Fast search, filtering, destination scoring | Extremely fast retrieval, simple to integrate, works well with metadata | Not sufficient for personalization on its own | Low to moderate |
| FAISS / Pinecone | Vector search for similarity-based travel recommendations | High-performance vector retrieval, ideal for embeddings | Needs optimized data pipeline; Pinecone adds external dependency | FAISS free; Pinecone usage-based |

Intuz Recommends

Clients often ask us how to choose the right toolkit for their use case. Our answer is a simple rule of thumb: map your team’s capabilities and constraints to a clear decision path.

For example:

- If you want a quick deployment → OpenAI + Elasticsearch hybrid

- If you want to scale pipelines → Vertex AI or Amazon SageMaker

- If you want maximum control → Hugging Face + PyTorch

- If you want a fast similarity search → Pinecone or FAISS

Whichever AI tools and technologies you choose, ensure they support fast embedding updates, low-latency retrieval, and the ability to re-rank results in real time.

3. Build the core recommendation engine

This is where you assemble the pipeline that takes user context, matches it with your inventory, and outputs ranked trip options:

- A data pipeline that prepares features

- A search layer that retrieves viable candidates

- A recommendation layer that ranks those candidates intelligently

Convert your cleaned data into model-ready features. Generate user and item attributes, create embeddings where helpful, and structure interaction data for training ranking or recommendation models.

Then prepare train–validation splits with a time-based holdout window to evaluate the system under conditions that reflect real booking behavior.
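A time-based holdout trains on older interactions and validates on the most recent window. This avoids the leak a random split would cause, where future behavior ends up in the training data. The sketch below assumes each interaction row has a `date` field. The 14-day window is an arbitrary default you would tune to your booking cycle.

```python
from datetime import date, timedelta


def time_based_split(interactions: list, holdout_days: int = 14):
    """Train on interactions up to the cutoff; validate on the most recent window."""
    latest = max(row["date"] for row in interactions)
    cutoff = latest - timedelta(days=holdout_days)
    train = [row for row in interactions if row["date"] <= cutoff]
    valid = [row for row in interactions if row["date"] > cutoff]
    return train, valid


if __name__ == "__main__":
    rows = [{"user": "u1", "item": "tulum", "date": date(2024, 6, d)} for d in (1, 10, 20, 30)]
    train, valid = time_based_split(rows)
    print(len(train), len(valid))  # 2 2
```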

Next, develop the search component, which answers a simple question: “Given this user and query, what could we show?”

Its job is to handle fast retrieval using a mix of basic filters (dates, origin city, budget, trip length), vector search for similarity, and business rules that exclude sold-out properties or irrelevant regions. The output is a manageable candidate pool of 100–500 trips or properties.
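The retrieval step can be sketched as hard filters and business rules followed by a similarity sort. The field names (`departs_from`, `available_month`, `rooms_left`, and so on), the toy embeddings, and the linear scan are assumptions for illustration. At production scale, the similarity step would run in a vector index such as FAISS or Pinecone, often before the metadata filters rather than after them.

```python
import math


def cosine(a: list, b: list) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def generate_candidates(query: dict, query_vec: list, inventory: list,
                        excluded_regions: frozenset = frozenset(),
                        pool_size: int = 300) -> list:
    viable = [
        item for item in inventory
        # Basic filters: origin, travel month, budget, trip length
        if query["origin"] in item["departs_from"]
        and item["available_month"] == query["month"]
        and item["price"] <= query["max_budget"]
        and query["min_nights"] <= item["nights"] <= query["max_nights"]
        # Business rules: drop sold-out stock and excluded regions
        and item["rooms_left"] > 0
        and item["region"] not in excluded_regions
    ]
    # Vector similarity: embeddings closest to the query come first
    viable.sort(key=lambda item: cosine(query_vec, item["embedding"]), reverse=True)
    # Cap the pool so the ranking stage stays fast (the text suggests 100-500 items)
    return viable[:pool_size]
```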

Once you have a valid candidate pool, the next task is ranking: “Out of all valid matches, what should appear first?” Travel platforms generally progress through three recommendation approaches as they mature: content-based filtering, collaborative filtering, and learning-to-rank.

Intuz Recommends

A proven approach for travel platforms is to start with content-based filtering when user history is limited, then layer in collaborative filtering as booking and interaction data grows. It’s only when both elements are available that you should introduce a learning-to-rank model that optimizes for your primary KPI (e.g., booking conversion or CTR).
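For the learning-to-rank stage, the simplest variant is pointwise. You train a classifier on past (features, booked) pairs and sort candidates by predicted booking probability. The pure-Python logistic regression below is a minimal sketch of that idea. It assumes each candidate is described by a small feature vector, here content score, collaborative score, and price fit. Pairwise or listwise methods (for example, LightGBM's `LGBMRanker` with the `lambdarank` objective) usually perform better once you have enough data.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_pointwise_ranker(features: list, labels: list, lr: float = 0.5, epochs: int = 500):
    """Logistic regression fitted with batch gradient descent on (features, booked) pairs."""
    n, d = len(features), len(features[0])
    weights, bias = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for x, y in zip(features, labels):
            error = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for j, xi in enumerate(x):
                grad_w[j] += error * xi
            grad_b += error
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def rank(candidates: dict, weights: list, bias: float) -> list:
    """candidates maps item_id -> feature vector; returns ids by predicted booking probability."""
    def booking_prob(x):
        return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
    return sorted(candidates, key=lambda item: booking_prob(candidates[item]), reverse=True)
```

Because the model is trained directly on booking outcomes, its ordering optimizes for conversion. Swapping the label for clicks would optimize for CTR instead.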

4. Integrate the engine with your existing stack

Now, integration happens across three layers:

a. Backend

The recommendation engine should run as a stable internal service that other components can call reliably. In most architectures, this takes the form of a dedicated microservice that runs independently while communicating with your main application via clear, predictable APIs.

The service exposes REST or GraphQL endpoints such as:

- POST /recommendations/trips

- POST /recommendations/hotels

- POST /search/query

Your platform sends requests to these endpoints with the necessary context, including the user ID or session ID, the parsed query or applied filters, and any additional information such as the origin city, device type, or locale.

The engine then returns a structured response containing a ranked list of items, such as destinations or properties, along with their scores, IDs, and optional explanation metadata.
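A request/response contract for `POST /recommendations/trips` might be modeled with dataclasses, as sketched below. The field names mirror the context described above but are otherwise hypothetical. `ranker` stands in for the engine's internal ranking function. The handler is framework-agnostic, so it can sit behind whichever web framework your platform uses.

```python
import json
from dataclasses import dataclass, field, asdict
from typing import Callable, List, Optional, Tuple


@dataclass
class RecommendationRequest:
    session_id: str
    query: str
    user_id: Optional[str] = None         # None for anonymous sessions
    filters: dict = field(default_factory=dict)
    origin_city: Optional[str] = None
    device: Optional[str] = None
    locale: str = "en-US"


@dataclass
class RecommendedItem:
    item_id: str
    item_type: str                        # e.g. "trip", "hotel", "destination"
    score: float
    explanation: Optional[str] = None     # optional metadata for a "why this?" hint


@dataclass
class RecommendationResponse:
    session_id: str
    items: List[RecommendedItem]


# Hypothetical ranker signature: returns (item_id, score, explanation) tuples
Ranker = Callable[[RecommendationRequest], List[Tuple[str, float, Optional[str]]]]


def handle_trip_recommendations(body: str, ranker: Ranker) -> str:
    """Framework-agnostic handler body for POST /recommendations/trips."""
    request = RecommendationRequest(**json.loads(body))  # unknown keys raise TypeError
    ranked = ranker(request)
    response = RecommendationResponse(
        session_id=request.session_id,
        items=[RecommendedItem(item_id, "trip", round(score, 4), why)
               for item_id, score, why in ranked],
    )
    return json.dumps(asdict(response))
```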

b. Frontend

On the front end, the key is to surface AI-driven results incrementally without redesigning the UI. Common integration patterns include:

- Adding an “Ask anything” conversational search field

- Introducing “Recommended for you” modules on the home page, destination pages, and search results

- Dynamically ranking destinations or properties using model scores

- Showing itinerary suggestions alongside traditional search results

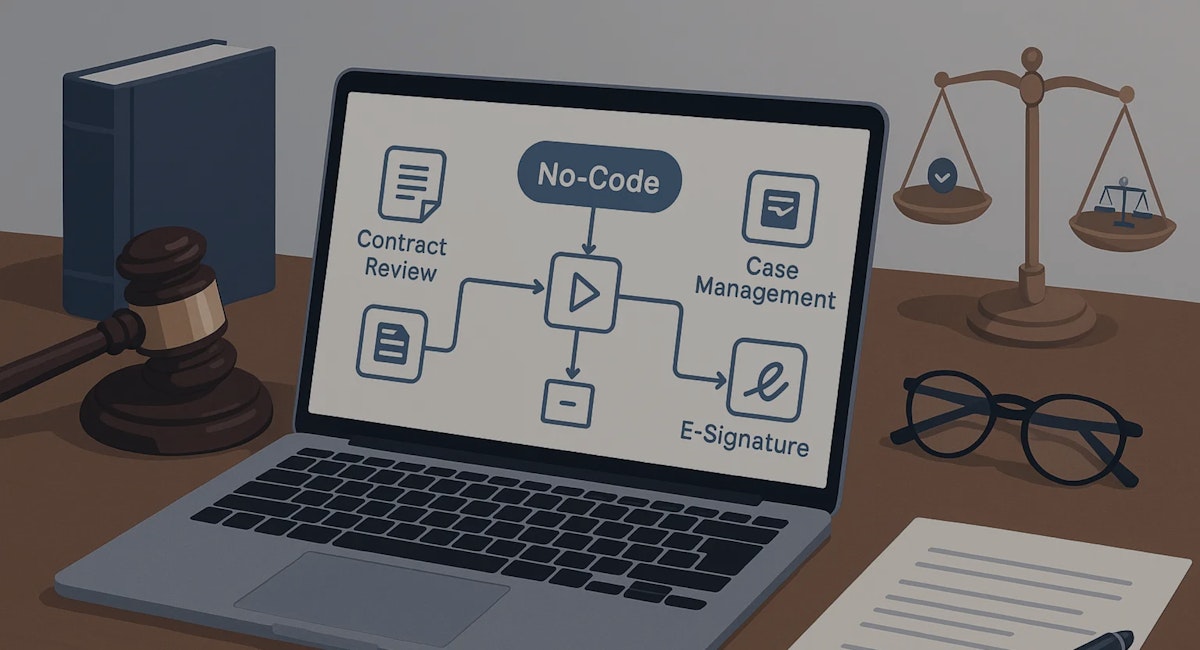

c. Third-party APIs and inventory

Most travel platforms rely on external suppliers such as GDS systems, OTA feeds, and airline or hotel APIs. Your recommendation engine should work on top of these sources. Here’s what a typical flow looks like:
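As an illustration of the engine working on top of multiple suppliers, the sketch below does the following before anything is ranked:

- queries several hypothetical supplier adapters concurrently
- tolerates a supplier that times out
- maps each schema onto one internal format
- keeps the cheapest offer per property

The adapter functions, their response shapes, and the name-plus-city matching key are assumptions. Real cross-supplier property matching usually relies on mapped IDs.

```python
import asyncio


# Hypothetical supplier adapters; each would wrap one GDS, OTA feed, or hotel API
async def gds_hotels(query):
    await asyncio.sleep(0)
    return [{"hotel": "Casa Azul", "city": "Tulum", "total": 1450.0}]


async def ota_feed(query):
    await asyncio.sleep(0)
    return [{"property_name": "casa azul", "location": "Tulum", "price_usd": 1399.0}]


async def slow_supplier(query):
    await asyncio.sleep(5)  # simulates a supplier that misses the deadline
    return []


def normalize(raw: dict, source: str) -> dict:
    """Map each supplier's schema onto one internal offer format."""
    if source == "gds":
        return {"name": raw["hotel"].lower(), "city": raw["city"],
                "price_usd": raw["total"], "source": source}
    return {"name": raw["property_name"].lower(), "city": raw["location"],
            "price_usd": raw["price_usd"], "source": source}


async def fetch_inventory(query: dict, timeout: float = 1.0) -> list:
    suppliers = {"gds": gds_hotels, "ota": ota_feed, "slow": slow_supplier}
    results = await asyncio.gather(
        *(asyncio.wait_for(fetch(query), timeout) for fetch in suppliers.values()),
        return_exceptions=True,
    )
    merged = {}
    for source, result in zip(suppliers, results):
        if isinstance(result, Exception):
            continue  # skip failed or timed-out suppliers rather than failing the search
        for raw in result:
            offer = normalize(raw, source)
            key = (offer["name"], offer["city"])
            # Keep the cheapest offer when several suppliers list the same property
            if key not in merged or offer["price_usd"] < merged[key]["price_usd"]:
                merged[key] = offer
    return list(merged.values())  # this merged list is what the engine ranks
```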

Intuz Recommends

- Use personalization output only where it influences what the customer chooses. Look for high-impact placements like “Recommended for you” modules, destination rankings, and itinerary suggestions.

- Add simple, contextual nudges, like “Trips similar to your last booking” or “Short breaks from [origin city] this weekend”, to guide customers toward relevant options without overwhelming the UI.

5. Test, launch, and optimize the engine

Before you roll out the recommendation engine, validate its behavior and release it gradually to ensure stability under real traffic.

First, run functional tests using mocked NLP outputs, supplier APIs, and ranking components to confirm each part behaves as expected and handles incomplete or ambiguous inputs.
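Functional tests with mocked dependencies are easiest when the pipeline receives its NLP, supplier, and ranking components as arguments. The `recommend` function below is a hypothetical entry point written that way. The tests use `unittest.mock.MagicMock` to check the happy path, an ambiguous query, and an empty inventory response. Save it in a module such as `test_pipeline.py` (name illustrative) and run it with `python -m unittest`.

```python
import unittest
from unittest.mock import MagicMock


def recommend(query, nlp, supplier, ranker):
    """Hypothetical pipeline entry point with injectable dependencies."""
    parsed = nlp(query)
    if not parsed.get("trip_type") and not parsed.get("destination"):
        return {"status": "clarify", "items": []}  # ambiguous: ask a follow-up question
    candidates = supplier(parsed)
    if not candidates:
        return {"status": "empty", "items": []}
    return {"status": "ok", "items": ranker(parsed, candidates)}


class RecommendPipelineTest(unittest.TestCase):
    def test_ranks_candidates_from_mocked_supplier(self):
        nlp = MagicMock(return_value={"trip_type": "beach", "month": 6})
        supplier = MagicMock(return_value=["tulum", "cancun"])
        ranker = MagicMock(side_effect=lambda parsed, cands: sorted(cands))
        result = recommend("beach in June", nlp, supplier, ranker)
        self.assertEqual(result, {"status": "ok", "items": ["cancun", "tulum"]})
        supplier.assert_called_once_with({"trip_type": "beach", "month": 6})

    def test_ambiguous_query_skips_supplier(self):
        supplier = MagicMock()
        result = recommend("somewhere nice", MagicMock(return_value={}), supplier, MagicMock())
        self.assertEqual(result["status"], "clarify")
        supplier.assert_not_called()

    def test_empty_inventory(self):
        result = recommend("ski in July", MagicMock(return_value={"trip_type": "ski"}),
                           MagicMock(return_value=[]), MagicMock())
        self.assertEqual(result, {"status": "empty", "items": []})
```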

Then test the full pipeline end-to-end, from NLP to candidate generation and ranking, to confirm the engine responds reliably under live-like conditions. Once the travel platform behaves consistently, move into controlled A/B testing.

Expose a small percentage of traffic to the AI-driven experience and compare it against your existing search flow. Track metrics like CTR, booking intent actions, conversion uplift, latency, and drop-off rates to determine whether the AI stack improves performance.
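Two pieces of the A/B test are worth getting right:

- Stable assignment, so a user sees the same experience on every visit.
- A significance check before declaring an uplift.

The sketch below hashes the user ID into 100 buckets to form a 10% treatment group. It then compares conversion rates with a standard two-proportion z-test. The experiment name and function names are hypothetical.

```python
import hashlib
import math


def assign_variant(user_id: str, experiment: str = "ai_search_v1", treatment_pct: int = 10) -> str:
    """Deterministic bucketing: the same user always lands in the same arm."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) % 100
    return "ai" if bucket < treatment_pct else "control"


def conversion_uplift(conv_a: int, n_a: int, conv_b: int, n_b: int) -> dict:
    """Compare control (a) with the AI arm (b) using a two-proportion z-test."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal CDF
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return {"relative_uplift": (p_b - p_a) / p_a, "z": z, "p_value": p_value}
```

For example, suppose 10,000 control searches produce 300 bookings and 10,000 AI-assisted searches produce 360. That is a 20% relative uplift with z of roughly 2.4, which is significant at the 5% level.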

Intuz Recommends

- Once your A/B test with 10% of traffic shows a meaningful lift over basic search, increase traffic gradually and activate your launch safeguards: rate-limit external API calls, fall back to manual search if the AI fails, and keep KPIs under watch.

- From there, retrain your models weekly with new behaviour and inventory data, and aim for consistent gains in your core metric, such as a 15%+ booking uplift.
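The launch safeguards can be sketched as two parts. A token-bucket rate limiter sits in front of the AI path. A fallback routes to the existing search whenever the limiter rejects a call or the AI path raises an error. `TokenBucket`, `search_with_fallback`, and the injected search functions are hypothetical names. The injectable clock exists only to make the limiter easy to test.

```python
import time


class TokenBucket:
    """Token-bucket rate limiter for outbound LLM or supplier calls."""

    def __init__(self, rate_per_sec: float, capacity: float, clock=time.monotonic):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.tokens = capacity
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill tokens for the elapsed time, capped at the bucket capacity
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def search_with_fallback(query, ai_search, basic_search, limiter: TokenBucket) -> dict:
    """Serve AI results when possible; otherwise fall back to the existing search flow."""
    if not limiter.allow():
        return {"source": "basic", "results": basic_search(query)}
    try:
        return {"source": "ai", "results": ai_search(query)}
    except Exception:
        # In production, log the failure and count it toward an alerting KPI
        return {"source": "basic", "results": basic_search(query)}
```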

Why Partner with Intuz for AI-powered Recommendation Engine Development

If you’re thinking about adding AI-driven search or recommendations to your travel platform, then the real question is how to build an engine that performs reliably with your inventory, your workflows, and your commercial goals. That’s where Intuz steps in.

We focus on the engineering realities of AI in travel: structuring fragmented supplier data, building stable feature pipelines, designing ranking logic that aligns with your revenue model, and integrating the engine without disrupting your current stack.

Our team has worked with enough multi-layered AI architectures to know what breaks under real traffic and what holds up. That experience shapes every build we take on.

More importantly, we approach these projects as partners, not vendors. We collaborate closely with your product and engineering teams, ensure you retain ownership of the system, and design every component so you can evolve it long after the initial deployment.

If you want practical clarity on how AI can work inside your business, book a free consultation with us today.

About the Author

Kamal Rupareliya

Co-Founder

Based in the USA, Kamal has 20+ years of experience in the software development industry, with a strong track record in product development consulting for Fortune 500 enterprise clients and startups in AI, IoT, web and mobile apps, cloud, and more. Kamal oversees product conceptualization, roadmap, and overall strategy, drawing on his experience in the US and Indian markets.